Rethinking Academic Excellence in the Age of AI

This week, my third-grade son was working on a presentation about Greek myths. He had to make a tri-fold poster on Zeus, king of the Greek gods, and when it came time to find a picture, we decided it might be fun to create one with AI. It became a family activity, one that revealed both the magical opportunities and the real limits of generative AI for all grade levels. It also became a clarifying moment for what it means to teach in the age of generative AI.

Zeus and Cubism

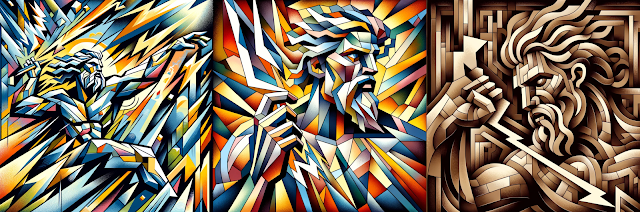

Our prompts were almost always simple. We started with “Please make me a picture of Zeus.” The first outcomes appeared in the style of a fantasy novel: stereotypical, bearded, and muscled. In our first few attempts, we also tried specifying details, such as how he should hold a lightning bolt:

Our son loves soccer, so we tried some other variations, asking it to “imagine he is a soccer player” wearing the school uniform and to “make it more kid-friendly”:

My wife had the idea to ask for a cubist Zeus. We learned that ChatGPT can’t do cubism. “Make it more abstract,” we instructed. “Try it all in shades of brown”:

These didn’t work, so we took a brief detour to try to teach ChatGPT cubism. “Draw a face in the cubist style,” we typed. Not quite. But we saw some incremental progress with more explicit direction: “Show multiple perspectives of specific parts of the face, so we might see the nose from both sides.” This moved the AI in the right direction, but it still couldn’t quite capture or replicate the abstract style:

We returned to Zeus and third grade. How about Zeus in the style of stencil graffiti like Banksy? Or a Renaissance painting? Or a French impressionist? Not bad. Now we were getting somewhere interesting:

And, closer to grade level, we tried a crayon sketch, a Minecraft character, and a teenage version of Zeus as a cartoon character:

ChatGPT’s responses were astonishing in their range. All this took place in less than ten minutes. In that time, it produced complete works drawn from nearly a dozen styles.

Awash in the Generic, We Still Need Artists

Prompts might push image subject matter toward new ideas, but generative AI’s statistically driven mode of creativity pushes style and composition back to the average. Novelty in content, but normalcy in form.

A Boon to Those Who Struggle

Writing and School

Writing is undergoing the same commodification as visual art. So why teach it?

We write and teach writing for two reasons: we write to explain and we write to explore. Writing to explain is the domain of analytical essays, lab reports, term papers, précis, and other writing through which we learn to organize and lay out our thinking. Writing to explore is the domain of journal entries, response papers, rough drafts, and other writing that helps us surface, sort out, and develop our unformed thoughts.

Excellence: Cubism, Novelty, and Breaking Boundaries

Making Meaning, Redefining Excellence, and How Teachers Can Move Writing Beyond AI

Peter Nilsson is a Senior Consultant with Aptonym.

His experience includes independent school leadership and teaching positions, along with other roles focused on innovation in education. He is the founder of Athena, a resource and collaboration hub for teachers, and is the editor and curator of the newsletter The Educator’s Notebook. Peter also serves on the Advisory Board for SXSWedu and the Center for Curriculum Redesign.

In his own words, Peter is “an educator committed to learning and making the world a better place.” Connect with Peter on LinkedIn.

This post was originally published in its entirety on Sense and Sensation.